Researchers have demonstrated a method to steal an artificial intelligence (AI) model without hacking into the device it runs on. The technique works even when the attacker has no prior knowledge of the software or infrastructure supporting the AI, raising significant concerns about intellectual property rights and data security.

According to Aydin Aysu, an associate professor at North Carolina State University and co-author of the research, the value of AI models cannot be overstated. Developing these models demands substantial computing resources and expertise, which makes protecting them imperative. When a model is stolen, the original creators lose their competitive edge, and the model itself becomes more exposed to attack, because unauthorized users can study it for weaknesses.

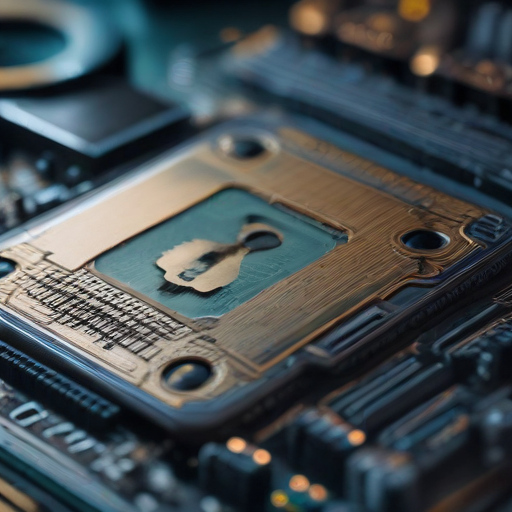

Ashley Kurian, first author of the paper and a Ph.D. student at NC State, emphasized that model theft threatens both sensitive data and the intellectual property of developers. The researchers extracted the hyperparameters of an AI model running on a Google Edge Tensor Processing Unit (TPU), which allowed them to deduce its architecture and layer characteristics, the details needed to recreate the model.

The demonstration targeted a commercially available chip, the Google Edge TPU, which is commonly used in edge devices. Kurian explained that by monitoring the electromagnetic signals the chip emitted during AI processing, the team could capture a “signature” of the AI’s operational behavior, which was integral to reverse-engineering the model.

The researchers developed a technique to estimate the number of layers in the AI model they targeted, a key detail because a typical AI model consists of many layers (between 50 and 242). Rather than trying to recreate the model’s entire electromagnetic signature at once, they worked layer by layer, comparing each portion of the captured signature against a database of previously recorded layer signatures to identify and recreate each layer in turn.
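To make the layer-by-layer idea concrete, here is a toy sketch in Python. It is not the researchers’ pipeline, which this article does not describe in detail. It assumes that each layer type leaves a short, known pattern in the trace, and it uses Pearson correlation as a stand-in similarity measure. The layer names, template values, noise pattern and the `identify_layers` helper are all hypothetical.

```python
from math import sqrt


def normalized_correlation(a, b):
    """Pearson correlation between two equal-length traces."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat trace carries no shape information
    return sum(x * y for x, y in zip(da, db)) / denom


def identify_layers(trace, templates):
    """Greedy matching: at each position, keep the template that best
    correlates with the next window of the trace, then skip past it."""
    recovered = []
    pos = 0
    while pos < len(trace):
        best_name, best_score, best_len = None, -2.0, 0
        for name, tmpl in templates.items():
            window = trace[pos:pos + len(tmpl)]
            if len(window) < len(tmpl):
                continue  # template is longer than what remains
            score = normalized_correlation(window, tmpl)
            if score > best_score:
                best_name, best_score, best_len = name, score, len(tmpl)
        if best_name is None:
            break  # nothing fits the remaining samples
        recovered.append(best_name)
        pos += best_len
    return recovered


# Hypothetical database of previously recorded layer signatures.
TEMPLATES = {
    "conv3x3": [0.0, 1.0, 3.0, 1.0, 0.0, 2.0],
    "dense": [2.0, 2.0, 0.0, 0.0],
    "pool": [0.0, 3.0, 0.0],
}

# Simulated capture: three layers back to back, with a gain, an offset,
# and a small deterministic disturbance standing in for measurement noise.
clean = TEMPLATES["conv3x3"] + TEMPLATES["pool"] + TEMPLATES["dense"]
noise = (0.05, -0.03, 0.02)
observed = [1.2 * x + 0.3 + noise[i % 3] for i, x in enumerate(clean)]

recovered = identify_layers(observed, TEMPLATES)
print(recovered)       # ['conv3x3', 'pool', 'dense']
print(len(recovered))  # estimated number of layers: 3
```

Working one window at a time keeps each comparison small, which is the appeal of splitting the signature by layer instead of matching it whole. A greedy match like this one can go wrong when two templates look alike or when noise is large, so a real attack would need a far richer database and a more robust comparison.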

In the demonstration, the technique recreated the stolen AI model with 99.91% accuracy. That level of precision underscores the risk to deployed AI models: an attacker who can observe a device’s electromagnetic emissions may be able to recover enough detail to rebuild the model running on it.

Aysu remarked that, now that this vulnerability has been identified, the next step is to develop strategies to mitigate it. The research highlights the pressing need for countermeasures against such sophisticated model-stealing attacks.

The study, which is being presented at the Conference on Cryptographic Hardware and Embedded Systems, opens the door to deeper conversations about safeguarding AI technology and encourages developers and organizations to strengthen their defenses. By addressing vulnerabilities head-on, the aim is not only to protect intellectual property but also to foster a more secure environment for the advancement of AI technologies.

In summary, while this new technique raises serious alarms about AI cybersecurity, it also provides an opportunity for developers to reevaluate security measures, paving the way for stronger protections and innovation in AI model deployment in the future.